The Organisation for Economic Co-operation and Development (OECD) is an international organisation that works to build policies for better lives. It works with citizens, governments and policy makers to establish evidence-based international benchmarks and to find solutions to a range of socio-economic and environmental challenges. Its work spans enhancing economic performance, creating jobs, fostering solid education, fighting international tax evasion, advising on public policy and setting international standards. The OECD consists of 37 member countries from around the world, including major economies such as the United States, Germany, the United Kingdom, Canada and Australia. Costa Rica is expected to join as the 38th member. The Key Partner countries, which work with the OECD but are not members of the organisation, are Brazil, India, China, Indonesia and South Africa.

Artificial Intelligence (AI) is a multi-use technology that is likely to advance the well-being and welfare of people, enhance innovation and productivity, contribute to a sustainable global economy and help solve major worldwide challenges. As AI is deployed in sectors such as production, transport, healthcare, security and finance, it also creates challenges for economies and societies, chiefly economic shifts and inequalities, effects on competition, and implications for human rights and democracy. The empirical studies and analytical measurements carried out by the OECD provide an overview of the AI technical landscape, its socio-economic impacts and the major policy considerations, and describe government AI initiatives and stakeholders at the global level. This work provides a background for standardising the policy environment for adopting AI in society. The OECD Committee on Digital Economy Policy (CDEP) developed a Council Recommendation that promotes a human-centric approach to fostering trustworthy AI while keeping in mind the economic incentives for innovation. The Council Recommendation is complemented by existing OECD standards in areas such as data and privacy, digital security, ethical business practices and risk management. This Recommendation on Artificial Intelligence is one of the first intergovernmental standards on AI, and it primarily aims to foster innovation and trust in AI.

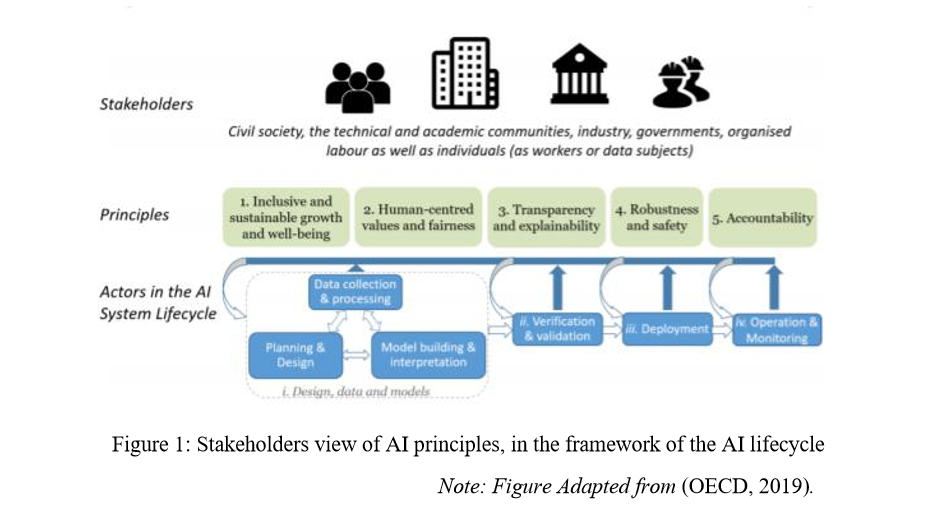

The standard set by the Framework/Recommendation is implementable and flexible enough to keep pace with the ever-evolving field of AI. The Framework recognises that AI has pervasive, far-reaching effects and worldwide implications that are transforming societies and economic sectors. It also recognises that AI can contribute to positive, sustainable economic activity and increase innovation and productivity to help solve key challenges. The Framework identifies five complementary principles for the responsible stewardship of trustworthy AI and calls on the organisations and individuals who deploy or operate AI to implement them. The principles are as follows (OECD, 2019):

1. INCLUSIVE GROWTH, SUSTAINABLE DEVELOPMENT AND WELL-BEING:

The development of AI should be inclusive, sustainable and innovative, and stakeholders should actively engage in the responsible stewardship of AI so that its outcomes are beneficial for society. Society's well-being, sustainable development and inclusive growth can be improved by augmenting human capabilities and human-computer interaction, enhancing innovation by including minority populations, reducing socio-economic, gender and racial gaps, and protecting natural habitats and the environment.

2. HUMAN-CENTRED VALUES AND FAIRNESS:

AI actors should respect the rule of law, democratic values and human rights throughout the lifecycle of the system. These include privacy and data protection, freedom, autonomy and dignity, equality and fairness, diversity and social justice, along with internationally recognised labour rights. There should be mechanisms and safeguards, such as the capacity for human determination, that are appropriate to the context and consistent with new technological developments. To promote human-centred values, AI systems should allow for human intervention where individual determination over digital identity and personal data is insufficient.

3. TRANSPARENCY AND EXPLAINABILITY:

AI actors should be transparent and responsible in disclosing information about AI systems. They should provide appropriate context and meaningful information that helps increase the general understanding of AI systems among the public and stakeholders. People who are affected by an AI system should be made aware of the decision-making process, the logic behind the prediction or recommendation, and any other relevant factors. They should also have the right to challenge these outcomes at any time.

4. ROBUSTNESS, SECURITY AND SAFETY:

AI systems should be designed to be secure, robust and safe throughout their lifecycle, so that under conditions of normal use, extreme adverse conditions and unforeseen circumstances they do not pose a safety risk. AI actors should ensure that the decisions made by AI systems are fully traceable to the data and knowledge that led the system to make them. A risk management approach should be applied in each phase of the development of AI systems, based on the role, context and actions of the system, to address risks such as bias, privacy and digital security.

5. ACCOUNTABILITY:

AI actors should be held responsible and accountable for the functioning of AI systems and for respecting the principles above, based on their roles and the context, and consistent with the state of the art.

NATIONAL POLICIES FOR TRUSTWORTHY AI:

Governments are expected to develop policies that promote trustworthy AI systems, in consultation with all stakeholders, to achieve mutually beneficial and fair outcomes for societies, consistent with the principles above. The recommendations to governments are as follows (OECD, 2019):

Investing in AI Research and Development:

Governments should adopt and sustain long-term investment in interdisciplinary research and development to spur innovation in AI, focusing on technical issues as well as the social implications of AI and the associated policies. Governments should also encourage private investment in open datasets that respect privacy and comply with data protection laws, to support AI research that is free of bias and to improve the use of frameworks and standards.

Fostering a Digital Ecosystem for AI:

Governments should foster the development of, and access to, the digital ecosystem in which AI systems are built and operated. This ecosystem will primarily be used for sharing AI knowledge. Governments should use mechanisms such as data trusts that are safe, fair and legal and that enable the ethical sharing of data.

Shaping an Enabling Policy Environment for AI:

Governments should promote a policy environment that is agile and that supports the transition of trustworthy AI systems from the initial project stage through to deployment. Governments should review and, where appropriate, adapt their policies and assessment frameworks as they apply to AI systems, to encourage innovation and competition.

Building Human Capacity and Preparing for Labour Market Transformation:

Governments should work closely with the various stakeholders involved in the transition from the current society to a digital one. People should be empowered to interact with AI systems across diverse applications and be given the necessary skills and training. Governments can run training programmes and provide support to help people displaced by AI systems return to the labour market.

International Co-operation for Trustworthy AI:

Governments, including those of developing nations, should co-operate with all other stakeholders to implement these principles and to advance the responsible stewardship of AI. Governments should work together to advance the sharing of AI knowledge by promoting multi-stakeholder, consensus-driven global technical standards for the interoperability of AI. Governments can also develop global standards and metrics for measuring AI research and development, and should empirically assess the progress made in implementing these principles.

STAKEHOLDERS:

Stakeholders are all the individuals and organisations, in the private and public domains, that are involved in or affected by AI systems, directly or indirectly. The key stakeholders in the OECD Framework are divided into internal and external stakeholders. Internal stakeholders are the individuals, organisations or AI actors that take part in the framework process and create it, whereas external stakeholders do not take part in shaping the framework but are directly or indirectly affected by it. AI actors are a subset of stakeholders: the people who play an active role in AI systems. They include the private or public organisations and individuals that acquire AI systems and operate or deploy them. AI actors include, inter alia, technology developers, service and data providers, system integrators, and software and business analysts.

The external stakeholders who are indirectly affected are the people who will be subject to AI systems once governments have set the regulations and standards. Organisations such as civil society groups, the technical and academic communities, industry, governments, and labour representatives and trade unions, as well as individuals such as workers and data subjects, will also be affected by AI systems.

OECD FRAMEWORK GOVERNANCE:

The OECD organisational structure consists of the Council, the decision-making body that provides oversight and strategic direction; the Committees, whose expert and working groups carry out discussion and review; and the Secretariat, which provides evidence and analysis and carries out the work of the OECD.

The OECD Council instructs the Committee on Digital Economy Policy (CDEP) to monitor the implementation of the Framework for the five years following its adoption and to report to the Council regularly thereafter. To implement the Framework's recommendations effectively, the Council also instructed the CDEP to develop practical guidance for implementation, in the form of a forum where information on AI activities and policies can be exchanged. This helps foster multi-stakeholder and interdisciplinary dialogue. The OECD AI Policy Observatory was therefore established as a public policy hub that aims to help nations encourage, develop, monitor and nurture trustworthy AI for the benefit of society. The AI Policy Observatory includes a live database of the AI strategies, initiatives and policies of member countries and stakeholders, who can create, share and update their implementation progress, which makes it possible to compare the key elements interactively. The Policy Observatory is continuously updated with AI policies, measurements, metrics and good practices, which supports guidance and practical implementation.

The inclusive platform of the AI Policy Observatory is built around three core attributes:

MULTIDISCIPLINARITY: The Observatory interacts with the communities that create policy in the digital, consumer protection, transport, education, science and technology, and economic sectors.

EVIDENCE-BASED ANALYSIS: The Observatory is the central hub for collecting and sharing evidence and data on AI and on the progress of countries, leveraging the OECD's reputation for measurement methodologies and empirical analysis.

GLOBAL MULTI-STAKEHOLDER PARTNERSHIPS: The Observatory helps governments engage with a very wide spectrum of stakeholders, including partners from the technical community, civil society, educationists, the private sector and many international organisations, providing a hub for collaboration and dialogue.

The OECD AI Policy Observatory compares policy responses and provides data and metrics to inform policy. It is the forum where member countries and organisations demonstrate the actions they have taken in accordance with the Framework. The live AI news, country dashboards and live data help in understanding developments across nations and help hold organisations accountable for their behaviour and actions. The OECD also has metrics and methods to measure the development of AI. One of these draws on scientific publications, open source software and patents, and finds a marked increase in AI development (Baruffaldi, 2020). Thus, the overall governance of the OECD Framework is carried out with the help of the OECD AI Policy Observatory.

The OECD Framework is one of the emerging AI frameworks and is being implemented in different nations. It is also the basis for the G20 Human-Centred AI Principles adopted by the G20 leaders. The Framework effectively covers the different aspects of ethical design set out in the Ethical Design Principles (Beard & Longstaff, 2018). The OECD Framework is not binding on member countries, but countries and governments should use it to foster the development of trustworthy AI in their societies. The fact that many governments have already adopted the Framework and are implementing its standards suggests that it is a comprehensive framework for the development of AI.

We cannot predict the future, but we can certainly keep it from being an anarchic one by implementing standards and measures that restrain the power of the technology and show us the way ahead. The Framework principles were created with the help of more than 20 governments, together with business leaders, civil society, labour representatives, and the scientific and academic communities. The decisions made by these groups will lay a foundation for economic growth and stability amid growing global challenges such as globalisation and recession. My question is: when the technological revolution comes, will these groups make the right decisions for the common people or for their own individual growth?